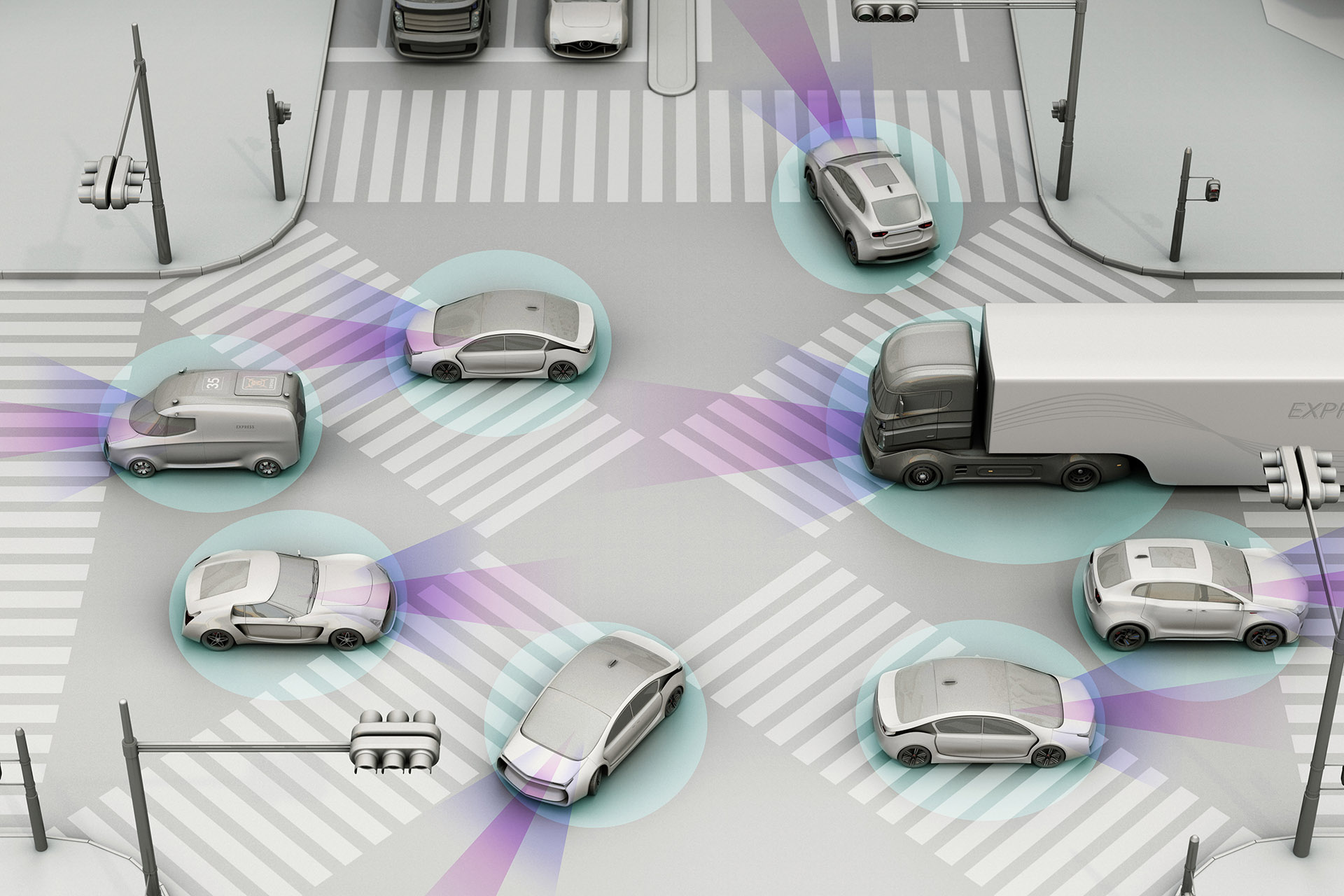

Self-driving cars. Robot cars. Autonomous vehicles.

The biggest hype in the automotive world today.

Who will be the first to put them safely on the city streets?

The FABULOS project is already piloting autonomous buses in five European countries. Companies like General Motors, Uber, Daimler and Tesla are pledging to release thousands of self-driving taxis – “robotaxis” – in the next little while.

The only question is: when.

As you may have guessed, developing autonomous transport for public use might be one of the few times you can truly say: it’s rocket science.

“It’s literally like putting somebody on the moon,” said Gary Silberg, a partner at KPMG. “It’s that complex.”

You need to build and maintain rich, detailed 3D maps, and deploy very complex sensors whose AI models must be trained to detect and react to objects with impeccable accuracy.

Also, driving involves intricate social interactions, like a cyclist signalling a turn or a police officer re-routing cars around an accident, which are still hard for robots to interpret.

“There’s a long way to go in all of these areas,” says Edwin Olson from the University of Michigan. “And reliability is the biggest challenge of all. Humans aren’t perfect, but we’re amazingly good drivers when you think about it, with 100 million miles driven for every fatality. The reality is that a robot system has to perform at least at that level, and getting all these weird interactions right can make the difference between a fatality every 100 million miles and a fatality every 1 million miles.”

The Challengers

We at Powerfleet (formerly Fleet Complete) are no strangers to the self-driving hype and partake in projects that involve sponsoring, building and testing autonomous vehicles.

So we like to get our information straight from the horse’s mouth – the engineers.

In July 2020, we sat down (via Zoom) with aUToronto, a student engineering team from the University of Toronto that is gunning for the finish line in the AutoDrive Challenge.

The AutoDrive Challenge™ is a 4-year autonomous vehicle collegiate competition in the U.S. that started back in 2018. The goal for the student teams is to develop a fully autonomous passenger vehicle capable of self-driving in complex urban environments. The tasks for 2020 encompass intersection handling, pedestrian avoidance, and smart navigation through city streets.

Today, the aUToronto team is the #1 contender to win the fourth and final leg of the competition in 2021.

Why?

Because they’ve won every stage of the competition for the past three years! So, in our book, they have some serious ‘street cred’.

When we asked them what their secret ingredient was, they just said – a very talented, focused team, great faculty advisors, and access to sponsors like Powerfleet (formerly Fleet Complete) that provide the funds to purchase state-of-the-art technology.

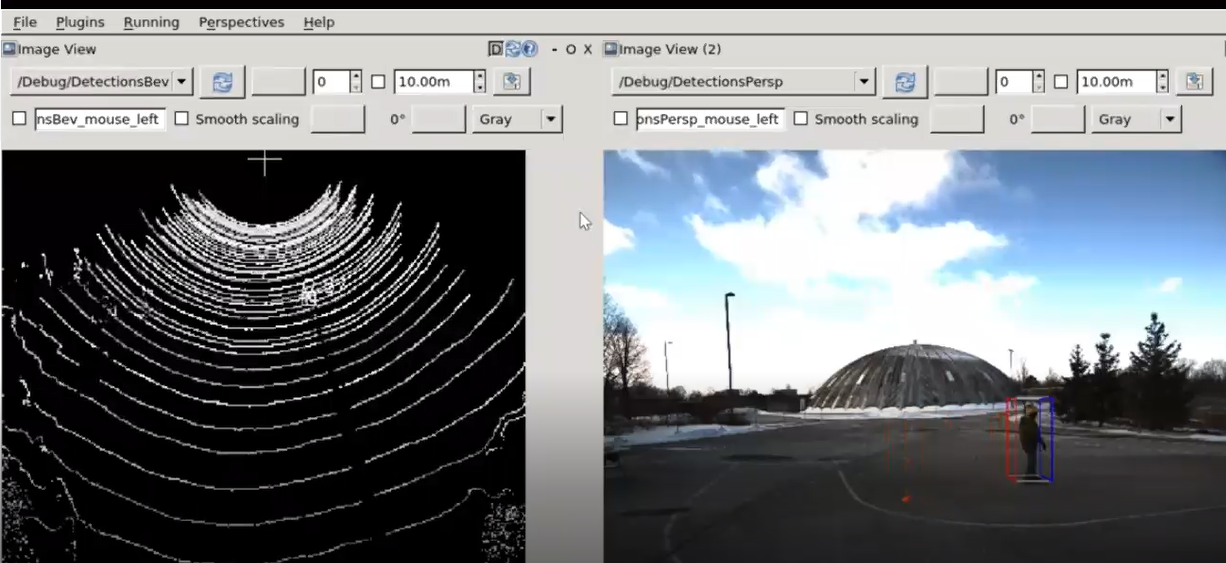

Vehicle’s “Perception”

For the vehicle to “see” objects in 3D, you need a LIDAR (Light Detection and Ranging) sensor, which examines the surroundings remotely using laser beams. As the beams bounce off nearby objects, the sensor measures how far away they are and constructs a three-dimensional representation of the scene. The aUToronto team uses a Velodyne HDL-64 3D LIDAR sensor, a very expensive piece of equipment that produces denser detections and perceives greater depth than cheaper LIDAR options.
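To make the ranging step a little more concrete, here is a minimal sketch of how a single laser return can be turned into a 3D point. It assumes a simple pulsed time-of-flight model (distance = speed of light × round-trip time / 2) and a sensor-centred spherical-to-Cartesian conversion. The function names and axis convention are hypothetical; this is not Velodyne’s driver API, and it ignores the per-laser calibration a real HDL-64 needs.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def range_from_time_of_flight(round_trip_s: float) -> float:
    """Distance to the reflecting object: the pulse travels out and back."""
    return SPEED_OF_LIGHT * round_trip_s / 2.0


def return_to_point(round_trip_s: float, azimuth_deg: float, elevation_deg: float):
    """Convert one laser return into an (x, y, z) point in the sensor frame.

    Assumed convention: x forward, y left, z up; azimuth measured from x
    toward y, elevation measured up from the horizontal plane.
    """
    r = range_from_time_of_flight(round_trip_s)
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = r * math.cos(el)  # projection of the range onto the ground plane
    return (horizontal * math.cos(az), horizontal * math.sin(az), r * math.sin(el))


# A return arriving after about 66.7 ns comes from an object roughly 10 m ahead.
x, y, z = return_to_point(66.7e-9, azimuth_deg=0.0, elevation_deg=0.0)
print(round(x, 2), round(y, 2), round(z, 2))
```

Sweeping many lasers across all azimuths and repeating this conversion for every return is what yields the dense point cloud the perception software works with.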

The LIDAR-based neural network seems like a fairly straightforward concept to grasp…sort of…but what about changing colours in the traffic light or reading STOP signs?

The answer is computer vision: video cameras paired with image-based neural networks, which take a lot of data and machine learning to build out.

Ziyad Edher, Lead for Traffic Lights and Signs at aUToronto, explains: “In essence, you can think of it as a little brain that tries to detect our specific objects of interest, which in this case are traffic lights and traffic signs.”

“As with most of these deep learning systems,” he highlights, “we need a whole lot of data to actually get it to run and train properly. And really these detection systems are only as good as the data you feed them.”

“Although there have been a whole lot of companies that are trying to do the same task of traffic light and traffic sign detection, a lot of them don’t make their data sets public, which is unfortunate but that’s a sad reality. So we went ahead to collect our own high-quality data of traffic lights and of traffic signs and ended up with about 35,000 images, labelled with traffic lights that we can use to train our model.”

Source: aUToronto


Powerfleet (formerly Fleet Complete): What is the biggest challenge in recognizing the difference between the colours on a traffic light?

aUToronto: That is a question that’s been heavily researched to understand what makes detection systems better, and what makes them robust to all kinds of conditions. One of the main challenges we face is the robustness to different lighting conditions and different backgrounds.

The data set we collected is based partly on data we gathered by driving the car ourselves, and partly on YouTube videos of people driving around various US cities, which we labelled using Scale AI.

So the biggest challenge is basically that diversity of scenarios. If we collect our data from only one source, we will most probably be able to perform well only in that particular type of scenario. So we tried to diversify our data as much as possible to mitigate that, and we also introduced some underlying mechanisms in our architecture that try to mitigate it.

FC: Right, because there is the weather variable – sunny, cloudy…

aUTo: Exactly. So what we do is augment our data sets in certain ways to simulate different conditions, such as fog or varied lighting, even when the images we collected weren’t captured in those conditions.
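The team doesn’t describe its augmentation pipeline, so the following is only a minimal sketch of the general idea, assuming images stored as rows of (R, G, B) pixels. Brightness is simulated by scaling every channel, and fog by blending each pixel toward a uniform grey. `adjust_brightness`, `add_fog`, `augment` and the parameter ranges are hypothetical; a real pipeline would typically use an image library and more elaborate models.

```python
import random
from typing import List, Tuple

Pixel = Tuple[int, int, int]   # (R, G, B), each 0..255
Image = List[List[Pixel]]      # rows of pixels


def _clip(value: float) -> int:
    """Round and clamp a channel value to the valid 0..255 range."""
    return max(0, min(255, round(value)))


def adjust_brightness(img: Image, factor: float) -> Image:
    """Scale every channel: factor < 1 darkens (dusk), factor > 1 brightens (glare)."""
    return [[tuple(_clip(c * factor) for c in px) for px in row] for row in img]


def add_fog(img: Image, density: float, fog_level: int = 200) -> Image:
    """Blend each pixel toward a uniform light-grey haze (density 0 = clear, 1 = opaque)."""
    return [[tuple(_clip((1 - density) * c + density * fog_level) for c in px)
             for px in row] for row in img]


def augment(img: Image, rng: random.Random) -> Image:
    """Randomly re-light an image and sometimes add fog; geometry is untouched."""
    out = adjust_brightness(img, rng.uniform(0.4, 1.3))
    if rng.random() < 0.3:
        out = add_fog(out, rng.uniform(0.2, 0.6))
    return out


sample = [[(100, 50, 250), (0, 0, 0)]]
print(augment(sample, random.Random(42)))
```

Because only pixel values change, the traffic-light bounding boxes labelled on each image stay valid, which is what makes this kind of augmentation cheap compared with collecting new data.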

FC: Do you use any special sensors on cameras?

aUTo: We use just the raw video feed, although we do use two cameras: one with a short-range lens and one with a long-range lens, to increase our depth range.

FC: Do you guys think you’ll make this high-quality data public after you finish the competition?

aUTo: We’ll definitely release our data sets once we finish the competition.

We’ve already released our pedestrian detection data set last year.

We have quite a lot of pedestrian data available. The detector is able to pick up pedestrians quite far away. You can also estimate how far away they are from your car and how far they will move. That information is critical for the planner to react properly.

Source: aUToronto


FC: What if you are driving at night, would that be a problem for the LIDAR?

aUTo: For now in our system we are not doing any sensor fusion – it is still a bit of a challenge within the research community. Obviously, multi-modality should help, but the issue is – when you have data coming from multiple sensors, how do you know which sensor is giving you the best results? For example, when it’s snowing or raining, cameras will do fine, but LIDAR will completely fail. So, this is a difficult question.

Navigation & Planning Architecture

aUTo: So for this year in particular, we want to implement a more robust navigation algorithm based on a lattice graph.

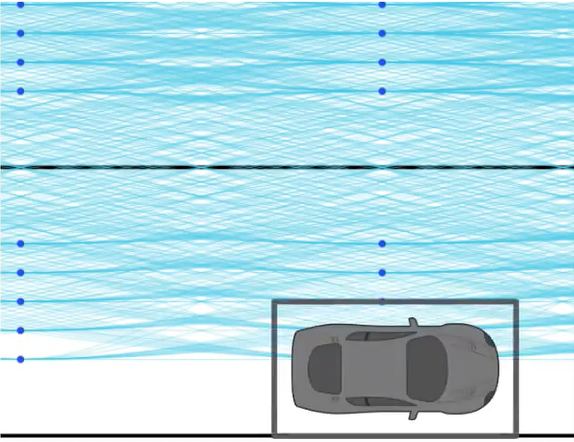

Source: aUToronto

aUTo: So this lattice graph is basically a very large number of points and edges that are pre-generated for every road segment. The key thing about them is that every point represents a position the vehicle can possibly be in, and every edge represents a path the vehicle can feasibly travel along.

Therefore, if we take any path on this pre-generated lattice, assuming there are no other obstacles, the vehicle should be able to navigate successfully along this path.

Essentially, this means that during the competition we will never create a path that our vehicle is unable to travel on. This helps eliminate a lot of problems, for example with control when handling unknown obstacles, that can result in weird vehicle behavior.

Instead, everything is fixed onto a predefined graph that we can use not only during the competition but also in simulation, for example to check that all paths are viable for the vehicle.
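As an illustration of the lattice idea and the offline viability check described above, here is a minimal sketch for one straight road segment. It assumes nodes sit at evenly spaced stations along the road with a handful of lateral offsets, that edges only connect neighbouring stations, and that an edge counts as “viable” when its heading stays under a fixed limit. The spacing values, the `max_heading_deg` limit and all function names are hypothetical; the interview doesn’t describe how aUToronto generates lattices for curved roads or which vehicle constraints they check.

```python
import math
from itertools import product


def build_lattice(num_stations, lateral_offsets, station_spacing):
    """Pre-generate nodes and edges for a straight road segment.

    Each node is (i, j): station i along the road, lateral offset index j.
    Edges run forward from station i to station i + 1 and may shift by at
    most one lateral slot.
    """
    positions = {
        (i, j): (i * station_spacing, lateral_offsets[j])
        for i, j in product(range(num_stations), range(len(lateral_offsets)))
    }
    edges = {node: [] for node in positions}
    for i, j in positions:
        for dj in (-1, 0, 1):
            nxt = (i + 1, j + dj)
            if nxt in positions:
                edges[(i, j)].append(nxt)
    return positions, edges


def edge_heading_deg(positions, a, b):
    """Angle of the straight segment a -> b relative to the road direction."""
    (xa, ya), (xb, yb) = positions[a], positions[b]
    return math.degrees(math.atan2(yb - ya, xb - xa))


def all_edges_viable(positions, edges, max_heading_deg):
    """Offline check: every edge must stay within the heading limit."""
    return all(
        abs(edge_heading_deg(positions, a, b)) <= max_heading_deg
        for a, succs in edges.items()
        for b in succs
    )


positions, edges = build_lattice(
    num_stations=5, lateral_offsets=[-1.0, -0.5, 0.0, 0.5, 1.0], station_spacing=5.0
)
print(all_edges_viable(positions, edges, max_heading_deg=10.0))
```

With 5 m between stations, a 0.5 m lateral shift means a heading of under 6 degrees, so the check passes; squeezing the stations to 1 m apart would make some edges fail. In this simplified model a path is just a chain of edges, so checking every edge once covers every possible path through the lattice.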

So, to briefly illustrate how we use this graph to deal with, say, an obstacle, a roadside, or an intersection: imagine you see a car covering part of the lattice graph. We create a bounding box for that car, and any node or edge inside the bounding box is marked as undrivable for our car, because it would collide with or get too close to the obstacle. We simply remove those and use the remaining nodes and edges for our vehicle’s traversal.
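The pruning step can be sketched as follows, assuming an axis-aligned bounding box inflated by a safety margin, edges checked by sampling points along them, and a plain breadth-first search to find any remaining path. The lattice layout, margin, sample count, box coordinates and function names are all hypothetical, and the team doesn’t say which search they run afterwards; a real planner would more likely use a cost-based search such as Dijkstra or A*.

```python
from collections import deque


def build_lattice(num_stations, num_slots, spacing=5.0, lateral_step=0.5):
    """Pre-generate nodes (i, j) -> (x, y) in metres for a straight segment.

    Edges go from station i to station i + 1, shifting at most one lateral slot.
    """
    centre = (num_slots - 1) / 2
    positions = {(i, j): (i * spacing, (j - centre) * lateral_step)
                 for i in range(num_stations) for j in range(num_slots)}
    edges = {node: [] for node in positions}
    for i, j in positions:
        for dj in (-1, 0, 1):
            nxt = (i + 1, j + dj)
            if nxt in positions:
                edges[(i, j)].append(nxt)
    return positions, edges


def inside(box, point):
    """True if point (x, y) lies in the axis-aligned box (xmin, ymin, xmax, ymax)."""
    (xmin, ymin, xmax, ymax), (x, y) = box, point
    return xmin <= x <= xmax and ymin <= y <= ymax


def prune(positions, edges, box, margin=0.5, samples=10):
    """Drop nodes and edges that fall inside the obstacle box grown by a safety margin."""
    xmin, ymin, xmax, ymax = box
    grown = (xmin - margin, ymin - margin, xmax + margin, ymax + margin)
    blocked = {n for n, p in positions.items() if inside(grown, p)}

    def edge_clear(a, b):
        # Check evenly spaced points along the straight edge a -> b.
        (xa, ya), (xb, yb) = positions[a], positions[b]
        return not any(
            inside(grown, (xa + (xb - xa) * k / samples, ya + (yb - ya) * k / samples))
            for k in range(samples + 1))

    return {a: [b for b in succs if b not in blocked and edge_clear(a, b)]
            for a, succs in edges.items() if a not in blocked}


def find_path(edges, start, goal_station):
    """Breadth-first search over the remaining lattice; returns a list of nodes or None."""
    if start not in edges:
        return None
    parents = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node[0] == goal_station:
            path = []
            while node is not None:
                path.append(node)
                node = parents[node]
            return path[::-1]
        for nxt in edges[node]:
            if nxt not in parents:
                parents[nxt] = node
                queue.append(nxt)
    return None


positions, edges = build_lattice(num_stations=6, num_slots=5)
parked_car = (9.0, -1.5, 16.0, -0.2)  # hypothetical box: x_min, y_min, x_max, y_max in metres
free = prune(positions, edges, parked_car)
print(find_path(free, start=(0, 2), goal_station=5))
```

Because the search only ever walks edges that survived pruning, whatever path it returns is, by construction, one of the pre-validated lattice paths that stays clear of the box.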

FC: Do you encounter surprise objects during the competition?

aUTo: Yeah, it’s actually the part where they definitely try to get you during the competition. Just last year, we had an unfortunate encounter with a surprise obstacle that we were unable to avoid, and it resulted in an interesting collision.

So this is what we’re trying to work out this year.

FC: And how fast does the vehicle go?

aUTo: The fastest speed they allow is 25 miles per hour, which is about 40 kilometers per hour. We usually try to go a bit slower than that. In general, it depends on the shape of the road and on any speed limit signs posted at the time.

For example, if the road is curved, our car will go slower.
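One common way to put numbers on “slow down on curves” (an assumption here; the team doesn’t say how they compute target speeds) is to cap lateral acceleration. On a curve of radius R, a car at speed v experiences a lateral acceleration of v²/R, so staying under a limit a_max means v ≤ √(a_max · R). The sketch below takes the minimum of that bound, any posted limit, and the 25 mph competition cap (25 × 0.44704 ≈ 11.18 m/s, or about 40.2 km/h). The 2.0 m/s² limit and the function name are hypothetical.

```python
import math

MPH_TO_MS = 0.44704                   # 1 mph is exactly 0.44704 m/s
COMPETITION_CAP_MS = 25 * MPH_TO_MS   # 25 mph, roughly 40 km/h


def target_speed(curve_radius_m, posted_limit_ms=None, max_lateral_accel=2.0):
    """Pick a speed (m/s) that respects the cap, any posted limit, and road curvature.

    curve_radius_m=None (or math.inf) means a straight road.
    max_lateral_accel is a hypothetical comfort/safety limit in m/s^2.
    """
    limits = [COMPETITION_CAP_MS]
    if posted_limit_ms is not None:
        limits.append(posted_limit_ms)
    if curve_radius_m is not None and math.isfinite(curve_radius_m):
        # Lateral acceleration on a curve is v^2 / R, so v <= sqrt(a_max * R).
        limits.append(math.sqrt(max_lateral_accel * curve_radius_m))
    return min(limits)


print(round(target_speed(20.0) * 3.6, 1))  # about 22.8 km/h on a 20 m radius curve
```

On a straight road the competition cap wins; on a tight 20 m radius curve the curvature bound drops the target to roughly 22.8 km/h.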

Dynamic Obstacle Handling

aUTo: So, one important capability of our detection system is that our vehicle must be able to predict the motion of objects around it to ensure a safe maneuver. We mentioned that there was an unfortunate collision with a “deer” last year, because we did not expect that the deer would rush out from the side of the road and, at that time, our vehicle was not able to predict the motion of the deer. So the deer crashed into the side of the car.

So this year, we built a motion prediction system. Our tracker estimates the velocity of objects around us and then, based on that, predicts their motion.

FC: The prediction system, is that done through, again, collating a lot of diverse data?

aUTo: We did it in quite a simple way. If we detect the same object in consecutive frames, we can calculate the difference in its position between frames, which tells us how fast the object is moving in the real world.

Then we simply do a linear prediction, where we assume that all objects move in a straight line. There are more complicated ways to make predictions, but we found that a simple linear prediction is sufficient.
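The approach described above, differencing positions across consecutive frames and then extrapolating in a straight line, can be sketched in a few lines. This assumes the “same object” association between frames has already been done and that positions are in metres in a fixed frame; `Observation`, `estimate_velocity` and `predict_linear` are hypothetical names, and the example numbers are made up. A production tracker would usually also smooth noisy measurements (for example with a Kalman filter), which the interview doesn’t mention.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    t: float  # timestamp in seconds
    x: float  # position in metres
    y: float


def estimate_velocity(prev: Observation, curr: Observation):
    """Velocity (vx, vy) in m/s from the same object seen in two consecutive frames."""
    dt = curr.t - prev.t
    if dt <= 0:
        raise ValueError("frames must be in increasing time order")
    return (curr.x - prev.x) / dt, (curr.y - prev.y) / dt


def predict_linear(curr: Observation, velocity, horizon_s: float, step_s: float):
    """Constant-velocity (straight-line) prediction of future positions."""
    vx, vy = velocity
    steps = int(round(horizon_s / step_s))
    return [(curr.x + vx * k * step_s, curr.y + vy * k * step_s)
            for k in range(1, steps + 1)]


# An animal 20 m ahead moving 1 m toward the road centre in half a second.
prev = Observation(t=0.0, x=20.0, y=5.0)
curr = Observation(t=0.5, x=20.0, y=4.0)
v = estimate_velocity(prev, curr)             # (0.0, -2.0) m/s
print(predict_linear(curr, v, horizon_s=2.0, step_s=0.5))
# [(20.0, 3.0), (20.0, 2.0), (20.0, 1.0), (20.0, 0.0)]
```

The planner can then treat the predicted positions like future obstacles, which is exactly the information that was missing in the deer collision.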

Simulation & Testing Tools

aUTo: In year two, we developed a lot of simulators, which turned out to be very useful this year with the COVID situation. They range from abstract to more realistic ones. We use the abstract ones to create different scenarios on the road and verify our algorithms against them.

Other ones we use to simulate different weather and lighting conditions, like with the traffic lights.

We can put our car in a simulator and collect a lot of data sets. Because it all runs on a computer, we don’t actually need to get into the car; we just run it through the simulator. Our car doesn’t know the difference between a real-world scenario and a simulated one.
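One way to get the property described above, where the driving software can’t tell simulated input from real input, is to have the stack depend only on an abstract sensor interface, with the real drivers and the simulator as interchangeable implementations. The sketch below assumes that design. `Frame`, `FrameSource`, `SimulatedCamera` and `run_perception` are hypothetical names, the string “detections” stand in for raw sensor data, and the interview doesn’t say how aUToronto actually wires its simulators into the car’s software.

```python
from dataclasses import dataclass
from typing import Iterator, List, Protocol


@dataclass
class Frame:
    timestamp: float
    detections: List[str]  # simplified stand-in for raw sensor data


class FrameSource(Protocol):
    """Anything that can produce frames: a real camera driver or a simulator."""
    def frames(self) -> Iterator[Frame]: ...


class SimulatedCamera:
    """Replays a scripted scenario; a real driver would expose the same frames() method."""

    def __init__(self, scenario: List[List[str]], fps: float = 10.0):
        self.scenario = scenario
        self.period = 1.0 / fps

    def frames(self) -> Iterator[Frame]:
        for k, objects in enumerate(self.scenario):
            yield Frame(timestamp=k * self.period, detections=objects)


def run_perception(source: FrameSource) -> List[str]:
    """The driving stack only sees FrameSource, never which implementation it is."""
    log = []
    for frame in source.frames():
        if "pedestrian" in frame.detections:
            log.append(f"t={frame.timestamp:.1f}s: yield to pedestrian")
    return log


sim = SimulatedCamera([["car"], ["car", "pedestrian"], []])
print(run_perception(sim))
```

Swapping `SimulatedCamera` for a real driver with the same `frames()` method would leave `run_perception` untouched, which is what lets the same code be tested at a desk instead of in the car.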

This makes our testing much faster and more convenient, especially in the current situation.

FC: Fascinating. Thank you for this. And what’s it like to be a part of the team to beat? How does it feel? You guys have won for two years in a row.

aUTo: It feels very satisfying to see how far we’ve come: building this car from scratch, making all the design decisions, and then going to the competition and winning. It’s really fulfilling.

It’s really about the experience of going through everything from A to Z, and being able to be involved in something like this. We’re very lucky to be part of the team.

FC: We wish you all the best of luck and we’ll be following your successes closely. Thank you for all the great insights and your time.